My Favorite April Fools’ Joke Comes From AI

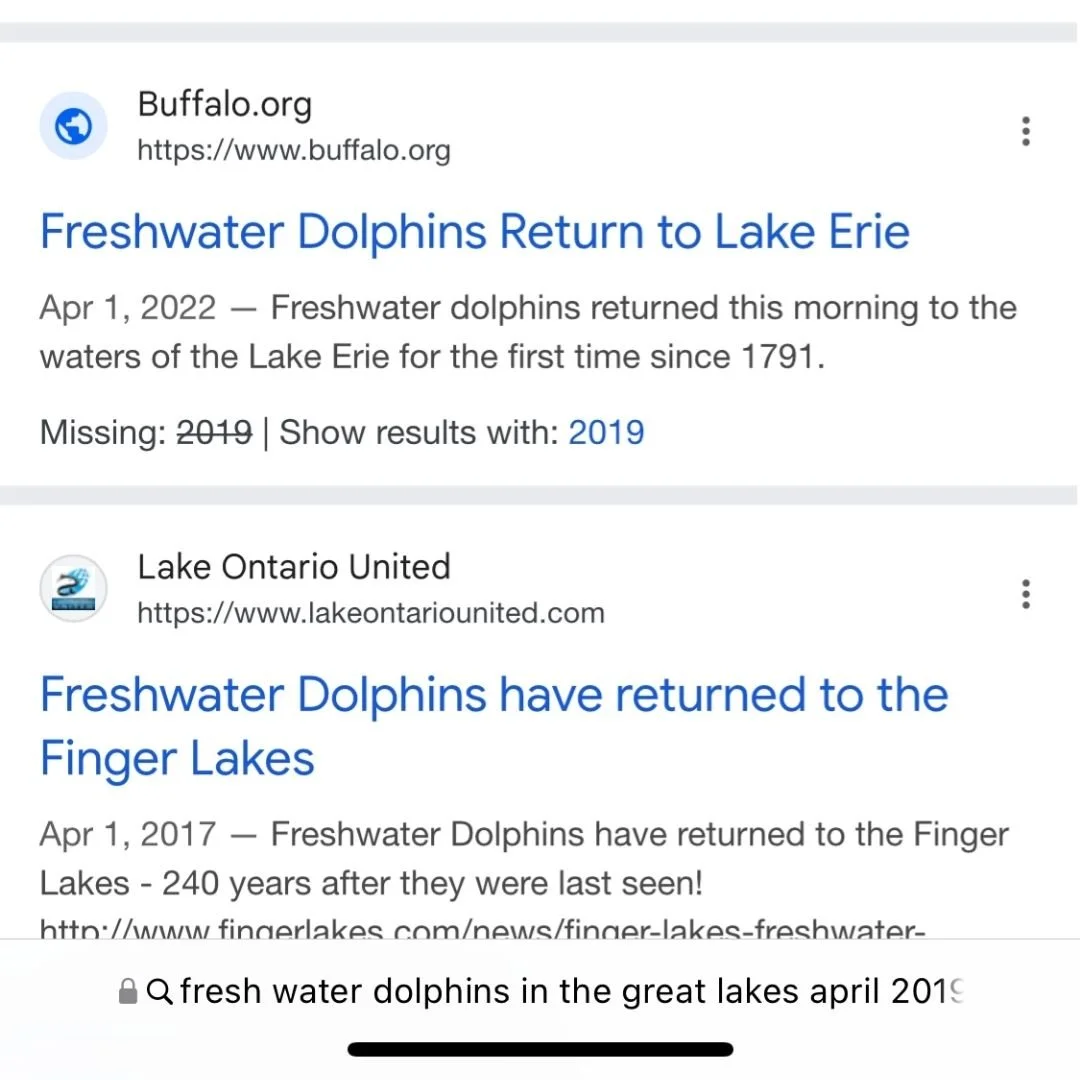

I don’t typically spend time on April Fools’ jokes, but a fake story caught my attention last year, and I haven’t been able to forget it since. Someone on Facebook posted an article about freshwater dolphins returning to Lake Erie. I knew it wasn’t real, but I couldn’t resist reading the post. I read the comments and saw people debating whether it was true. Then I saw a comment saying that if you ask AI, it says dolphins are in the Great Lakes. At the time, that was true. I tried it, and the chatbot said yes, freshwater dolphins are returning to the Great Lakes. I can’t find that AI response anymore, but the story still shows up in a Google search.

So, that takes me down the road of the love-hate relationship with AI. It is great for getting ideas and suggestions, helping clean up grammatical errors, etc. I listen to many podcasts to learn and keep up with industry-related topics, and it is funny to hear people share their opinions on AI. People say, “I use it for X, Y, and Z, but anything else is not ethical.” But who draws that line? It is different for everyone. Many people use it for everything, including creating sites to drive revenue; others claim not to use AI at all, and I believe many fall somewhere in the middle.

The Power of Believable Misinformation

What started as an April Fools’ joke years ago gained unexpected traction long after its original posting—all because AI began referencing it as if it were fact. The original author, likely posting for a laugh in 2017, probably never imagined their joke would resurface years later in debates over its validity.

But here’s the thing: AI presents information with a tone of authority. When people ask a question, they expect a direct answer—often assuming it to be true, especially when it comes from a seemingly knowledgeable source. In this case, the idea of freshwater dolphins isn’t entirely outlandish; after all, freshwater dolphins exist in other parts of the world. And let’s be honest—who wouldn’t love the idea of dolphins in the Great Lakes? The thought of swimming with them, free from the risks of sharks (or in some regions, alligators), makes for an oddly appealing fantasy.

Then there’s the comment section, the real battleground of internet misinformation. People will argue over anything, and before you know it, a joke turns into a full-blown debate. I even saw one comment that simply said, “Really?” That single word, a mix of skepticism and curiosity, perfectly captures how misinformation spreads.

This example highlights something bigger: AI, search engines, and online discussions all play a role in how false information circulates. Even when something starts as a joke, it can take on a life of its own. That’s why understanding how misinformation spreads—and how to recognize it—is more important than ever.

AI and the Spread of False Information

Artificial intelligence is trained to provide answers based on the vast amount of data available online. But here’s the problem: not all of that data is accurate. AI models don’t inherently “know” what’s true; they rely on patterns, probabilities, and existing information—some of which may be outdated, misleading, or entirely false.

This is how AI-generated misinformation can seem incredibly credible. If a piece of false information has been repeated enough times online, AI can pick it up and present it as fact. This happens because AI doesn’t always distinguish between well-researched sources and unreliable ones. If a website looks authoritative, an AI model might assume its content is trustworthy—even if it’s completely made up.

The implications of this are huge. Think about medical misinformation, historical inaccuracies, or financial scams. If AI confidently repeats misinformation, it reinforces false beliefs. And because AI responses often sound polished and well-structured, people are more likely to trust them without questioning their validity.

The case of the freshwater dolphins in Lake Erie is a perfect example. AI likely found references to this joke in multiple places online, which gave it the illusion of credibility. And once people saw AI “confirming” it, the misinformation gained even more traction. This raises an important question: How can we trust AI-generated information? The answer lies in understanding how different platforms handle misinformation.

Google vs. ChatGPT: Where Misinformation Lives

If you Google “freshwater dolphins in Lake Erie,” you might still find articles or forum posts discussing it, even though it’s not real. But if you ask ChatGPT the same question, you might get a disclaimer saying there are no dolphins in the Great Lakes. Why the difference?

The key lies in how these platforms process and present information.

Google acts as an aggregator. It doesn’t “fact-check” the search results it shows; it ranks web pages by how relevant they appear to your query. This means if a website publishes false information and ranks well in Google’s results, people can still find and believe it.

AI models like ChatGPT attempt to provide fact-based answers. OpenAI and other AI companies update their models to correct misinformation, remove outdated claims, and limit the spread of falsehoods. However, AI still relies on past training data, and not everything gets corrected immediately.

The problem? Neither system is perfect. Search engines can amplify misinformation, while AI-generated content can misinterpret data or present outdated knowledge as fact. The best approach is to use both tools critically and verify information from reliable sources.

How to Spot Misinformation Online

So, how do you avoid falling for online hoaxes? Here are some simple but effective ways to fact-check information before sharing it:

1. Check multiple sources

If something sounds surprising or too good to be true, look for confirmation from reputable news outlets, research institutions, or government agencies.

2. Use fact-checking websites

Websites like Snopes and PolitiFact are great for verifying claims, especially those related to science, politics, and viral stories.

3. Look at the original source

Where did the information come from? Was it from a verified expert, a credible organization, or just a random social media post?

4. Be skeptical of AI-generated content

If an AI chatbot provides information, don’t assume it’s 100% correct. AI is a tool, not a perfect source of truth.

5. Be wary of social media debates

Just because people are arguing about something online doesn’t mean it’s real. Misleading information often spreads through heated comment sections and viral posts.

Taking a few extra minutes to fact-check can make a huge difference in stopping the spread of false information.

April Fools: Harmless Fun or Dangerous Trend?

April Fools’ Day has long been a time for lighthearted pranks, but in the age of social media and AI, misinformation spreads faster than ever. What was once an obvious joke can quickly turn into an internet myth.

Many companies and media outlets participate in April Fools’ pranks, but should they be more responsible with their jokes? Consider cases where misleading posts about government policies, scientific discoveries, or financial opportunities have caused confusion or even panic.

The reality is that some people don’t check the date on a post. Others might stumble across an old prank and take it seriously. In a digital world where misinformation already runs rampant, should brands and content creators think twice before publishing misleading content—even as a joke?

The Psychology Behind Viral Hoaxes

Why do people believe in things like freshwater dolphins in the Great Lakes? It comes down to psychology.

Wishful thinking – People want to believe in extraordinary, exciting things. If something sounds cool (but not entirely impossible), they’re more likely to accept it.

Nostalgia and folklore – Myths and urban legends often stem from a collective desire to preserve mystery. People enjoy the idea of hidden creatures, lost civilizations, and undiscovered phenomena.

Social reinforcement – When enough people say something is true, others tend to believe it—even without evidence. This is why misinformation spreads so easily in online communities.

Understanding these psychological factors can help us recognize why misinformation persists—and why we need to stay vigilant about what we accept as truth.

How Do You Use AI?

Misinformation is everywhere, and AI plays an increasing role in how we consume information. Whether it’s an April Fools’ joke taking on a life of its own or AI confidently stating a false claim, we all have a responsibility to be critical thinkers.

So, what do you think?

How do you use AI in your daily life? Have you ever encountered misinformation from an AI tool? What do you think is the “right” way to use AI responsibly?

Drop your thoughts in the comments—I’d love to hear your perspective!